Future directions for evidence-informed policy

Dear Partners,

As I wrap up an incredible five years at the Hewlett Foundation and gear up for a new job at the Open Philanthropy Project, I’ve been reflecting on which developments in the evidence-informed policy field I’m most excited about. Top of mind is your work on responsive, decision-focused evidence. To celebrate and spread the word, I wrote a blog post last week about why I’m inspired by these new developments (spoiler alert: because of the impact you have achieved) and why now is a crucial moment for greater investment in responsive, decision-focused evidence.

This follow-up note is for you: organizations in the evidence field who have helped make this shift happen, including funders who support them. I’ll share ways I think practitioners can increase impact, including by improving the odds of raising more funding to fuel success.

What do I mean by “responsive, decision-focused evidence,” and why does it matter?

Responsive, decision-focused evidence brings together a wide range of methods to produce and use quality evidence that is relevant, timely, and less costly. In short, we are talking about faster and cheaper evidence, starting with the decision that needs to be made, not academic questions — meaning it’s more likely to be used. Leading practitioners are using data sources and methods like machine learning, satellite or telecom data, quick syntheses of existing studies’ results, and relational tools (such as close, long-term partnerships) to help governments learn faster and more effectively how to improve lives.

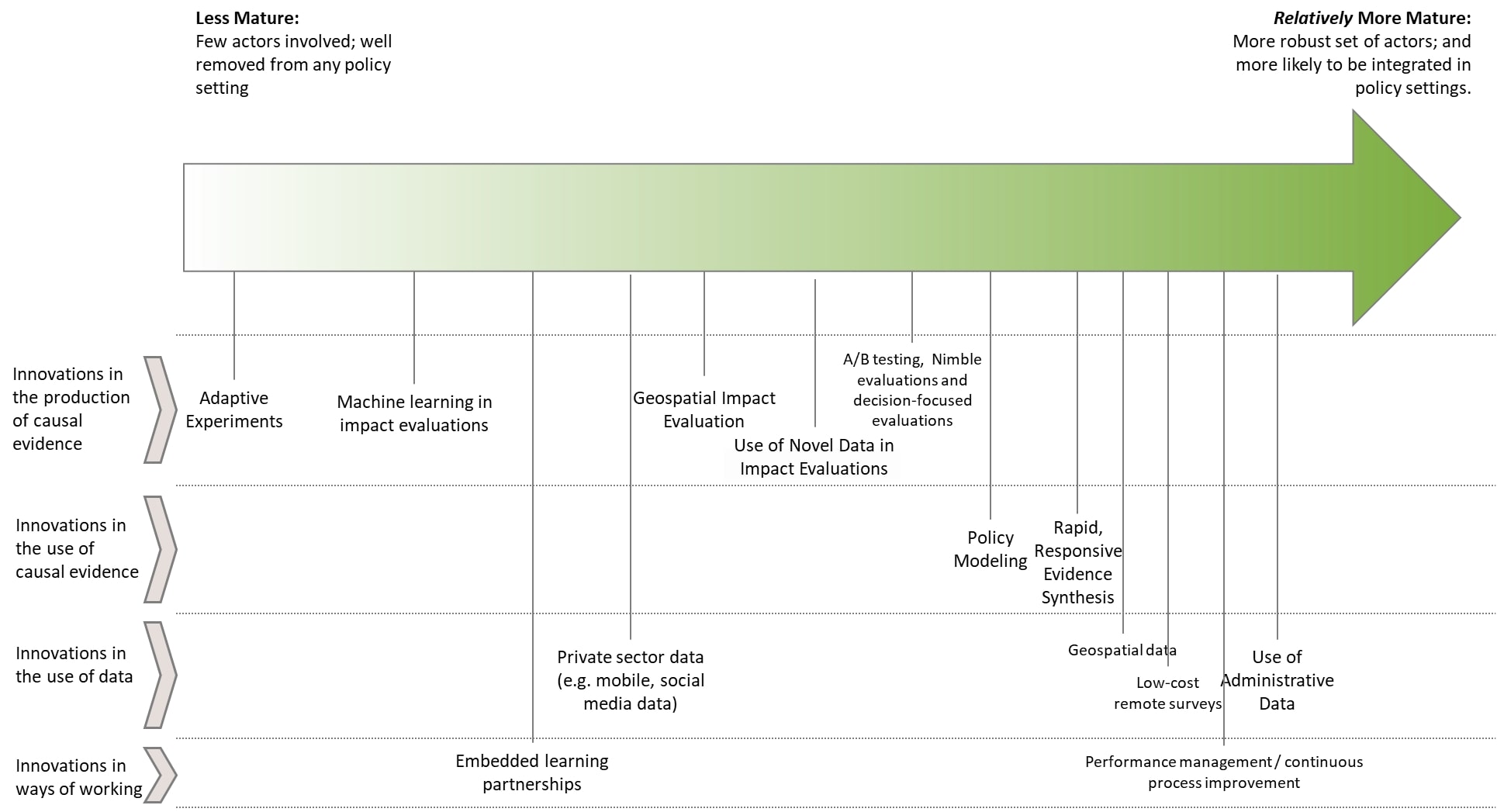

Maturity of methods in the responsive, decision-focused evidence toolkit. See Responsive, Decision-focused Evidence: A Landscape Analysis.

I define “quality evidence” as information that will improve real-world outcomes. Quality is not just about data quality and methodological rigor but also relevance and timeliness. Rigorous evidence delivered too late means missed opportunities to improve people’s lives. At the same time, we must be honest about methodological limitations when making tradeoffs between speed, rigor, and relevance.

As an example, in 2014 the Zimbabwean Ministry of Finance wanted to understand how much a proposed cash transfer program would improve local economies. Rather than saying there was no answer to that question, the Transfer Project and UNICEF used locally relevant data, combined with data from past impact evaluations, to give the best answer they could to that question — while being transparent about the limitations of their approach. This work ultimately contributed to the Ministry of Finance doubling the money it allocated for the program.

Our consultant partner Michael Eddy, who spoke with many of you, summarizes these methods and notes how they contribute to better, faster, or cheaper evidence use, and the Center for Global Development recently released complementary pieces. I learned a lot from this work, and I think you will, too. The pieces began through the lens of impact evaluation and also include other innovations in data use and ways of working. The figure above maps some of these new methods based on their level of maturity.

What’s next? What should we start, stop, and keep doing?

Responsive, decision-focused evidence is just one exciting new direction that the evidence-informed policy community is moving toward. As I reflect on my time at Hewlett, here is what I suggest the evidence community continue, stop, and start doing to be even more effective in improving outcomes for people, policies, and institutions:

We should continue:

- Strategically working so that success begets success: We have heard from many grantee partners that when they respond to policymakers’ needs, build trust-based relationships, and provide useful evidence informed by policymakers’ own constraints, they develop relationships that can lead to greater policy influence over time, and grow the community of policymakers who are receptive to (or even demand) evidence.

- Exploring whether and how to institutionalize rapid evidence use in governments: The African Institute for Health Policy and Systems’ partnership with the Nigerian parliament and Twende Mbele’s work on rapid evaluations demonstrate that, with concerted effort, early progress can be made in changing governments’ capacities, systems, and incentives. Changing the inner workings of governments is long-term, ambitious work, and as with all such efforts, we need to learn to what extent and how this has impact.

- Being transparent about methodological limits: Informing immediate decisions often requires providing analysis based on incomplete information — for example projecting the potential long-term effects of a given program based on evidence from the first year of implementation and from other settings. Being responsive does not mean overpromising or glossing over nuance. The field should continue to be transparent about the strengths and limitations of the available evidence.

We should stop:

- Arguing about methods. The field has made real progress, but we still spend too much time criticizing each other’s work. While debate over whether a specific alternative approach is better for addressing a particular policy question can be helpful, reiterating long-standing critiques of methods like randomized trials or systematic reviews is rarely constructive.

- Saying we have no evidence. If your organization does not feel well-positioned to comment on a particular policy problem, that is reasonable. But we seldom have no evidence. COVID showed ways to better flex analytic muscle while incorporating a range of methods and types of evidence to inform decision making.

We should start:

- Ruthlessly prioritizing based on what is likely to improve people’s lives the most. For most of you, the demands on your organization and your own ambitions for impact are far greater than what your current resources allow you to do. These constraints and the multitude of opportunities require ruthless prioritization. For the field to be successful, it must demonstrate that it can tangibly improve lives. It is not obvious to me that this is how many organizations in the field currently prioritize their work (even after accounting for constraints like funder interest). If you do make decisions based on where you expect to have the greatest impact on lives, please communicate that more openly (including with your future program officer!).

- Shifting the gaze. Evidence produced and published for international audiences often shapes what gets put in and left out of publishable papers. As I’ve read over numerous practitioners’ policy memos and PowerPoint presentations, I’ve observed that some do not contain local data or context — even when the underlying research was never intended for publication. The work that I find most useful when I’m a decision maker is informed by a strong understanding of the local context and institutional environment (including what’s been tried before and how a given problem is currently being addressed). I’m also more likely to use analyses that consider the trade-offs between different policy options going forward.

- Sharing policy wins and connecting them to funding requests. We have seen again and again how flexible resources have enabled organizations to be more responsive and take advantage of opportunities that would not be possible with only restricted funds. Of course, funders should continue reforming their own practices, both by offering general operating support and by building flexibility into program and project grants. This kind of change is much more likely if practitioners identify and share compelling stories about how flexible resources better enabled them to achieve impact, and where a lack of flexible resources led to missed opportunities.

Thank you for allowing me to support your extraordinary work. I’m excited to see what you do next.

Warmly,

Norma

Special thanks to Michael Eddy for his input on this piece.